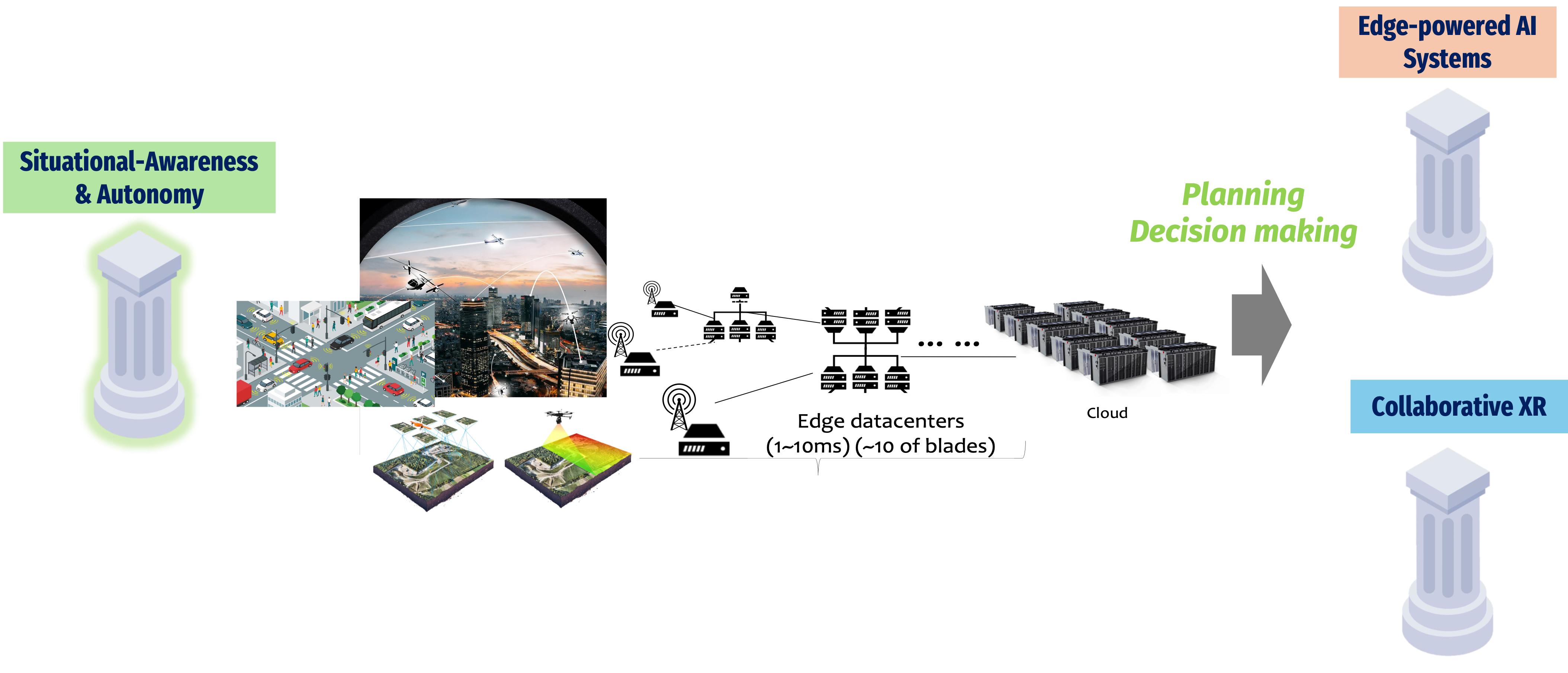

Bring collaborative situational awareness and autonomy to the edge

The goal of this pillar is to create end-to-end system support for collaborative multi-agent sensing and situational awareness in dynamic and potentially contested environments. This includes sharing each agent's local, heterogeneous sensor data (e.g., camera feeds, LiDAR 3D point clouds, and mmWave radar returns), as well as making real-time use of available edge computation. We will focus on two applications: multi-drone search and target tracking, and collaborative sensing for Connected and Automated/Autonomous Vehicles (CAVs). The research in this pillar will focus on the accuracy of situational awareness at each drone or vehicle in contested and uncertain environments. It will not provide control and adaptation performance guarantees. However, tight integration with the Autonomy pillar will result in edge-based assured autonomy.